Today I have an overview of new developments in the Docker ecosystem. I’ll briefly explain what Docker is and how it is evolving from a tool for packaging and distributing applications into a set of tools for orchestrating and managing loosely or tightly coupled cloud solutions.

If you prefer pretty images and my “radio voice” (cough), you can click play here; otherwise, keep reading below.

What is Docker?

If you’re not familiar with the Docker explosion of the last couple of years, let me give you a brief overview of what Docker is.

The scope of the project is ever-expanding, but it can be summarized in roughly four concepts:

- Docker is a common format, a packaging format for cloud applications.

- It defines a lightweight way to link applications and containers together. Once you have packaged your application you can expose the ports and volumes that the application uses to other containers or to the outer host.

- Docker is a way to cache all the steps needed to build your application. The community has produced ready-made technology stacks, and this caching also gives you a fast upgrade path for your own applications.

- Docker provides a central registry, a global namespace in which you can store your applications if you want to. You can also host your own private registry if you need it.
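To make the caching point above more concrete, here is a toy Python model of how build steps can be cached. It is only a sketch, not Docker’s actual implementation: `layer_keys` and `build` are hypothetical helpers, the step strings are illustrative, and the assumption is that each step’s cache key depends on the step text and on every step before it, so changing one step invalidates that step and everything after it.

```python
import hashlib


def layer_keys(steps):
    """Return one cache key per build step; each key depends on all earlier steps."""
    keys = []
    parent = ""
    for step in steps:
        # Chain the parent key into the hash so a change ripples to later steps.
        parent = hashlib.sha256((parent + "\n" + step).encode()).hexdigest()
        keys.append(parent)
    return keys


def build(steps, cache):
    """Walk the steps, skipping cached ones. Returns the steps that had to run."""
    executed = []
    for step, key in zip(steps, layer_keys(steps)):
        if key not in cache:
            # Stand-in for actually running the step and storing its result.
            cache.add(key)
            executed.append(step)
    return executed


cache = set()
v1 = ["FROM ubuntu", "RUN apt-get install -y python", "ADD app-v1 /app"]
v2 = ["FROM ubuntu", "RUN apt-get install -y python", "ADD app-v2 /app"]

first_build = build(v1, cache)   # nothing cached yet, every step runs
second_build = build(v2, cache)  # only the changed final step runs
print(first_build)
print(second_build)
```

In this model the first build runs all three steps, while the second build only re-runs the final `ADD` step because the first two keys are already in the cache. That is the "fast upgrade path" idea in miniature.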

Containers vs Virtual Machines

The above is one way to define what Docker is. Another way is to contrast it with virtual machines.

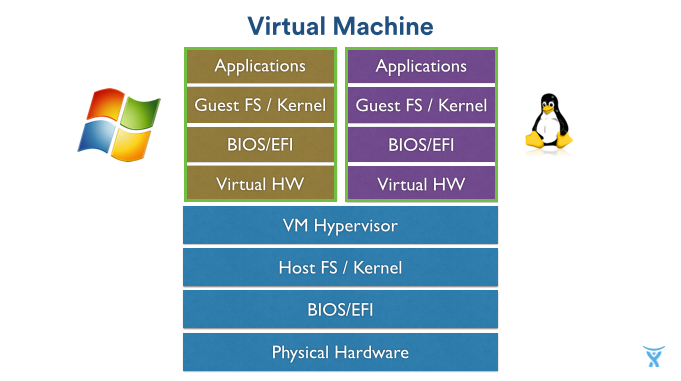

A very popular choice in modern cloud-based development and deployment is to package an application and install it inside a virtual machine. It’s an efficient model but it has drawbacks.

The main drawback is that you’re shipping and booting an entire virtualized operating system whenever you distribute an application. Together with your application – which might be just a few megabytes – you’re shipping a virtual Ethernet driver and an entire operating system with all the binaries and libraries, including the statically and dynamically linked C libraries that an operating system needs.

Maybe that’s overkill, wouldn’t you say?

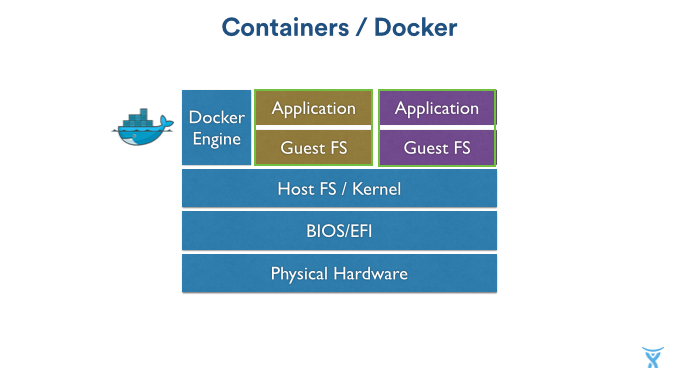

The Docker engine is a process that sits on top of a single host operating system. It manages and runs these specially packaged containers in isolation.

The container itself sees only its own process running. It has access to a special layered “copy on write” file system which isolates it from the rest of the underlying storage. The application inside the container thinks it has access to an entire machine.
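The layered “copy on write” idea can be illustrated with a small Python sketch. This is only an analogy built on the standard library’s `collections.ChainMap`, not how Docker’s storage drivers are implemented; the file paths and the `start_container` helper are made up for illustration.

```python
from collections import ChainMap

# Shared image layer; treated as read-only by convention in this toy model.
image_layer = {
    "/etc/hostname": "base",
    "/app/server.py": "print('v1')",
}


def start_container(image):
    """Give each container its own empty writable layer on top of the image."""
    # ChainMap reads fall through to lower layers; writes only touch the first map.
    return ChainMap({}, image)


a = start_container(image_layer)
b = start_container(image_layer)

# Container "a" changes a file; the change lands in its private top layer.
a["/etc/hostname"] = "container-a"

print(a["/etc/hostname"])            # the copy written by container "a"
print(b["/etc/hostname"])            # still the value from the shared image
print(image_layer["/etc/hostname"])  # the image itself is untouched
```

Both containers share the same underlying image data, and each one only pays for the files it actually modifies.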

Containers start up very quickly because they don’t need to boot an entire operating system. You can also pack many more containers onto a single host than you can virtual machines.

Docker ecosystem evolving

Nowadays people are embracing Docker as a way to move towards a microservices architecture. For example, a typical modern web application has multiple components: it might have front-end code and static assets to serve, and it might need access to fast key/value stores and to relational databases.

All these components need to be managed, coordinated and linked together into a cohesive unit. The Docker ecosystem is moving towards support for these workflows.

Just a few days ago Docker maintainers announced beta versions of three new pieces of this puzzle.

The first one is Docker Machine, a tool to provision environments both locally and on cloud providers with very simple and streamlined commands. I recently published a short video screencast on how to use docker-machine.

The second is Docker Swarm, a cluster management solution. Once you have Docker installed on different hosts, you can control them as a unit, and you can use constraints to automatically deploy and balance applications across your Swarm cluster.
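To give a feel for what scheduling with constraints means, here is a toy Python scheduler. It is a sketch under stated assumptions and not Swarm’s real code: the `Host` class, the `schedule` function, the `storage` label and the node names are all hypothetical, and the placement rule (keep hosts whose labels match, then pick the one running the fewest containers) is a simplified stand-in for a cluster scheduler.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    labels: dict
    containers: list = field(default_factory=list)


def schedule(hosts, container, constraints):
    """Place a container on the least-loaded host that satisfies every constraint."""
    candidates = [
        h for h in hosts
        if all(h.labels.get(key) == value for key, value in constraints.items())
    ]
    if not candidates:
        raise RuntimeError(f"no host satisfies {constraints}")
    # Ties go to the first matching host in the list.
    target = min(candidates, key=lambda h: len(h.containers))
    target.containers.append(container)
    return target.name


hosts = [
    Host("node-1", {"storage": "ssd"}),
    Host("node-2", {"storage": "ssd"}),
    Host("node-3", {"storage": "disk"}),
]

# Ask for three database containers that must land on SSD-backed hosts.
placements = [schedule(hosts, f"db-{i}", {"storage": "ssd"}) for i in range(3)]
print(placements)
```

The three containers are spread over the two SSD hosts, and `node-3` is never used because it does not match the constraint. The point is that you describe where a container may run and leave the choice of host to the cluster.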

The third part is Docker Compose, formerly known as Fig, used to describe how the components of your applications should be linked together.
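As a rough illustration of what describing how components are linked buys you, the sketch below models an application as a plain Python dictionary and derives a start order from the links. The service names, the `links` key as used here and the `start_order` helper are assumptions made for this example; the sketch shows the general dependency-ordering idea rather than Compose’s actual file format or implementation, and it needs Python 3.9 or later for `graphlib`.

```python
from graphlib import TopologicalSorter

# Hypothetical application: a web front end linked to a database and a cache.
app = {
    "web": {"links": ["db", "cache"]},
    "db": {"links": []},
    "cache": {"links": []},
}


def start_order(services):
    """Return service names ordered so every service starts after the ones it links to."""
    # TopologicalSorter expects a mapping of node -> set of predecessors.
    graph = {name: set(spec["links"]) for name, spec in services.items()}
    return list(TopologicalSorter(graph).static_order())


order = start_order(app)
print(order)  # "db" and "cache" (in either order) come before "web"
```

Because the links are declared as data, a tool can work out what to start first instead of you scripting it by hand.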

If you want to see a few brief examples and configurations for all of the above, watch the video below. Here are some timed links to skip to the sections you care about:

Full video

Conclusions

With these three pieces of the puzzle, Docker becomes much more than just an application format. You now have a tool to install Docker on many hosts, a tool to declaratively deploy your applications on a cluster, and a tool to describe how your applications should be linked together. If you put these together you get a very nice and compelling abstraction over the cloud infrastructure, which is fantastic.

It’s early days for these new tools. The Docker ecosystem is moving really fast and the promise is really big. I’m very excited by these new developments. I hope you are too, and that you have found this useful.

Thanks for reading and let us know what you think at @atlassiandev or at @durdn.