Thanks to the flawless organisation of the GitHub team, Git Merge 2015 happened last week in Paris. It hosted the Git Core Contributor Summit on the first day, and a day of Git talks plus the celebration of Git’s 10th anniversary on the second. I felt very humbled and fortunate to participate in the first day and to witness real discussions amongst the core contributors. Here are a few notes I took during the discussions of the core team.

Keynote of Junio C. Hamano (chief maintainer of Git)

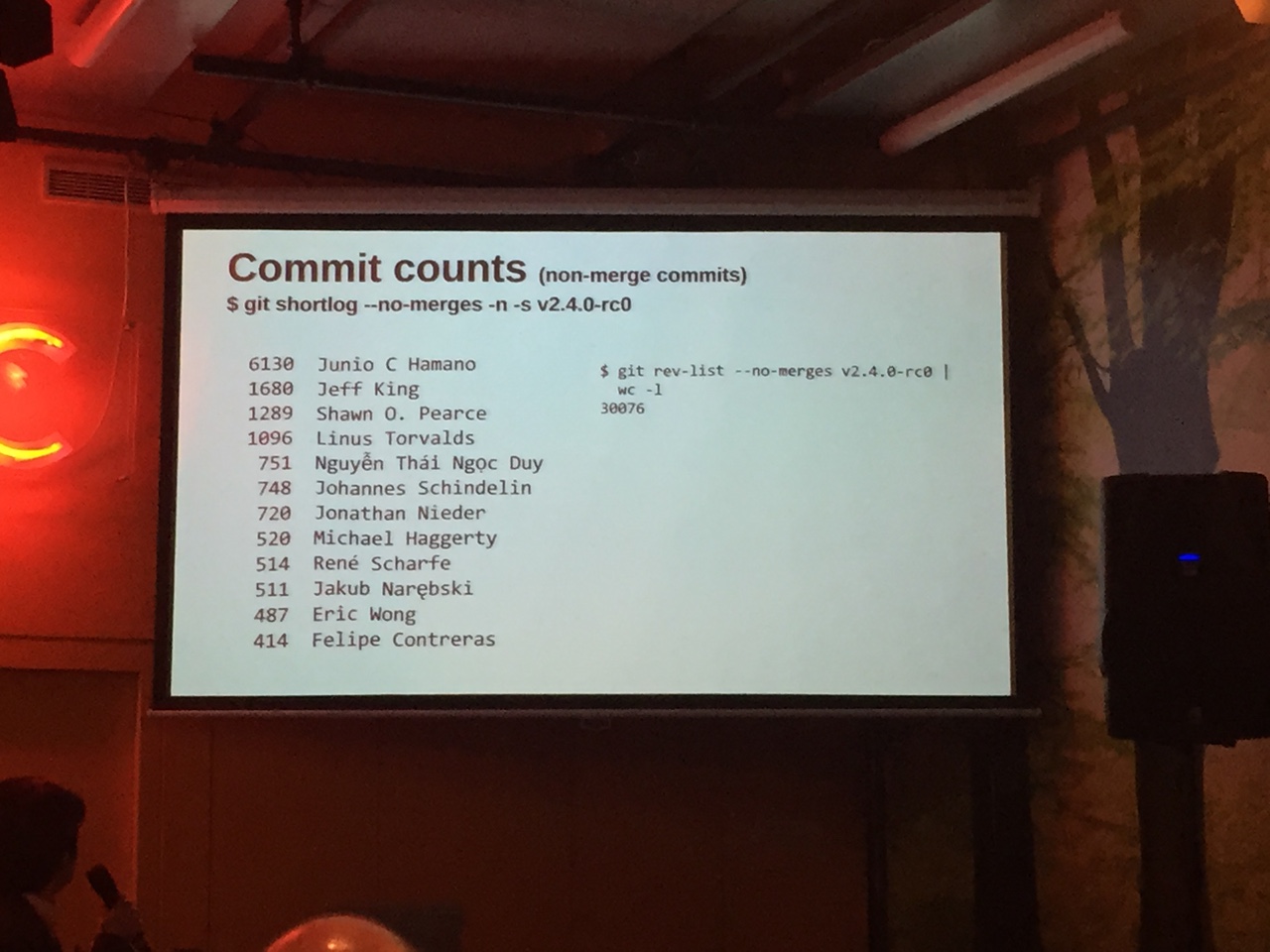

Junio C. Hamano – chief maintainer of Git – gave the opening presentation. He went through the entire history of the Git repository, slicing it and dicing it to highlight different contributors.

In his words, not all commits are of equal value: you can’t just count the number of commits or the lines of code to see who contributed more to the project. He used all sorts of cool commands to find interesting stats and the names of people who made important contributions.

For example, translators generally made few commits but wrote many lines of very valuable content.

The next question was: how valuable is a contribution that is later superseded by a different implementation? This is another reason commit counts don’t mean that much. People who borrowed code from other code bases have also been instrumental and valuable, even though they have few commits. He also praised the contributors who wrote the manual.

He also noted that line counts skew results towards finishing touches. What if someone had a brilliant idea that got shadowed by many refinements and now appears as though someone else wrote that part?

And finally he answered the question: have we gotten rid of the original Git? Apparently only 0.2% of the original Git source code is left at the tip of Git’s master branch. To get to this stat he ran blame backwards and read the result in reverse. If my notes are right, 195 files from the early version are still in Git’s code base today. The most stable and untouched part of the code base is the index concept, which lives in the cache source file.
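To make the "how much of the original is left" idea concrete, here is a minimal sketch. It is not Junio's method (he ran blame in reverse); it assumes the simpler forward approach of counting how many lines `git blame --line-porcelain` attributes to a chosen set of early commits. The function names are made up for illustration.

```python
import re
from collections import Counter
from typing import Iterable

# A porcelain header line starts with a 40-hex commit id followed by line numbers.
HEADER = re.compile(r"^([0-9a-f]{40}) \d+ \d+")


def lines_per_commit(porcelain: str) -> Counter:
    """Count blamed lines per commit from `git blame --line-porcelain` text."""
    counts: Counter = Counter()
    current = None
    for line in porcelain.split("\n"):
        if line.startswith("\t"):
            # A TAB-prefixed line is the file content blamed on `current`.
            if current is not None:
                counts[current] += 1
            continue
        match = HEADER.match(line)
        if match:
            current = match.group(1)
    return counts


def surviving_fraction(counts: Counter, original_commits: Iterable[str]) -> float:
    """Fraction of blamed lines still attributed to the given early commits."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    original = set(original_commits)
    kept = sum(n for sha, n in counts.items() if sha in original)
    return kept / total
```

Feeding it the blame output of every file at the tip, and passing the ids of the earliest commits, would give a rough percentage of the kind quoted above.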

Important contributors are also bug reporters, feature wishers, reviewers and mentors, alternative implementors, trainers and evangelists.

He also praised the new initiative of creating a community-owned newsletter, “Git Rev News”, to summarise the work of the mailing list and share the most relevant articles and links from the Git sphere amongst enthusiasts and users alike.

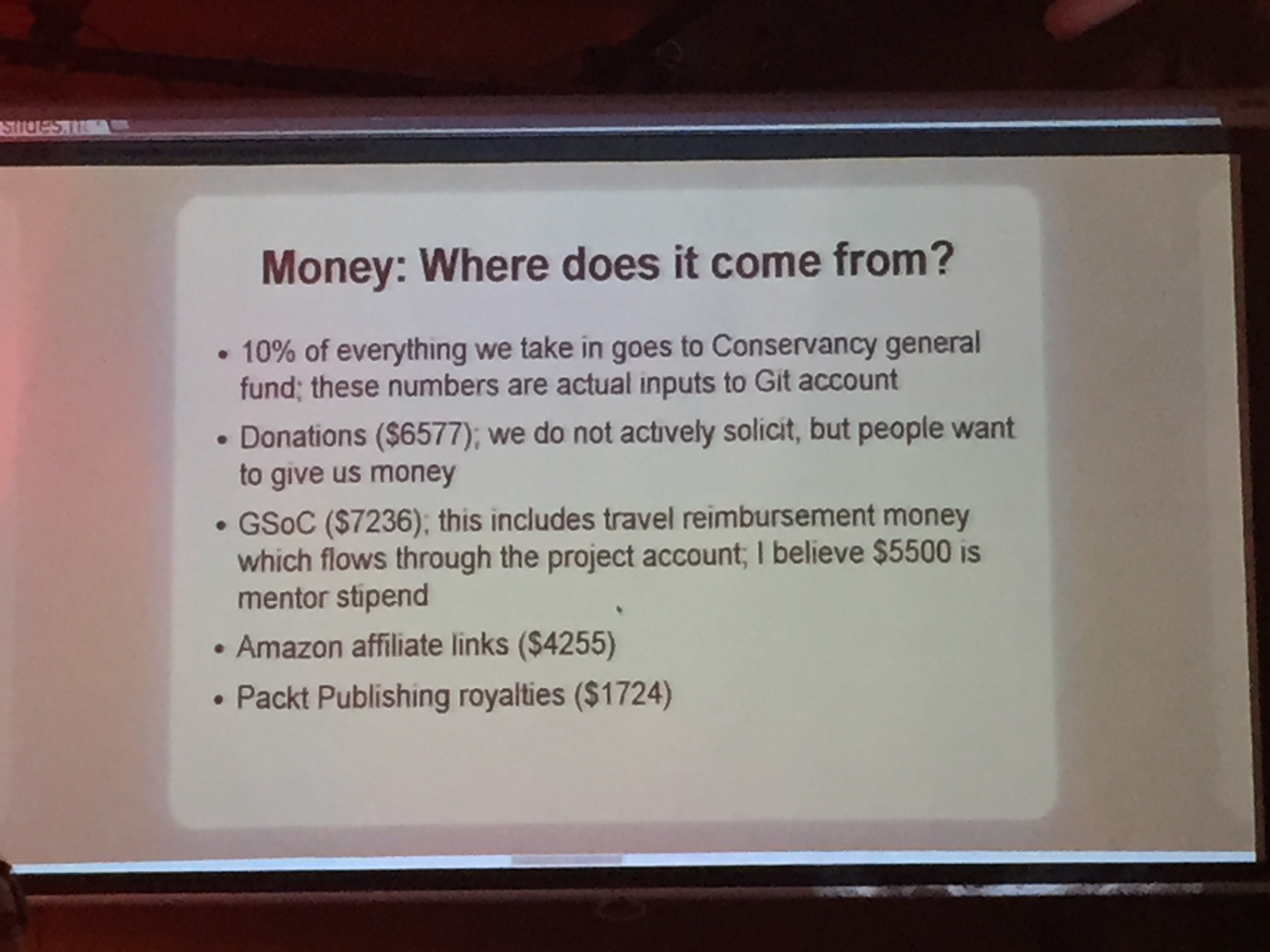

Jeff King on Software Freedom Conservancy

Jeff King gave a talk on Software Freedom Conservancy and the governance and income of the Git project. Right now there is no formal governance structure but he asked if the community felt a committee or a more formal organisation was needed. It was felt the current structure is working well. His talk and notes are here.

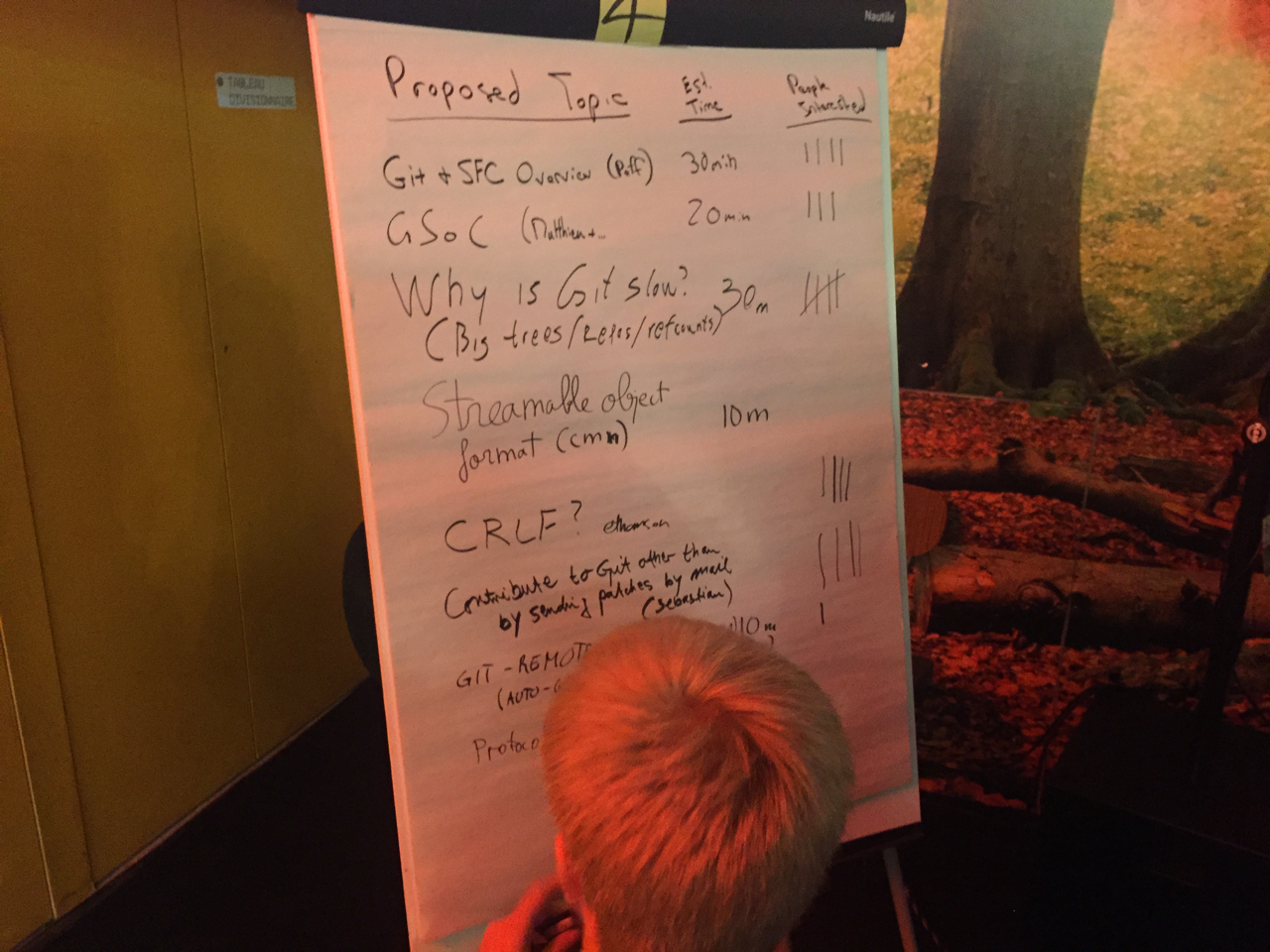

The rest of the Contributor Summit followed an unconference style, with people bringing up topics they wanted to discuss amongst the contributors.

Why is Git slow? How do we make Git faster?

Next up, Jeff King and Ævar Arnfjörð Bjarmason led a deep discussion on “Why is Git slow?” and what can be done about it.

Repositories can be very large in number of bytes, but that can simply come from having large blobs. Once repositories have a large number of commits and trees, however, like Mozilla’s repository or the FreeBSD ports tree, performance degrades noticeably. The latter has a tree with 50k entries. Then there are repositories that are not big but have a lot of refs, and those perform very poorly. It was reported that some people using Gerrit have 300k refs.

Jeff said that GitHub has tens of millions of refs, because new ones are created each time a repository is forked, owing to the way they organize their back-end.

Ævar reported that Booking.com has 600k commits with 1.5 GB of text files, and that they used to have 150k refs. The latter number was caused by deployments creating tags. The immediate issue was solved with a sliding window that keeps only 5k tag refs. git pull was taking 15 seconds because of the number of refs: under a second of that was the actual data transfer.
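A sliding window over deployment tags can be sketched in a few lines. This is only an illustration under assumptions: the tag names, the timestamp field, and the function name are hypothetical, and the notes do not say how Booking.com actually implemented it.

```python
from typing import Iterable, List, Tuple


def tags_to_prune(tags: Iterable[Tuple[str, int]], keep: int = 5000) -> List[str]:
    """Return the tag names that fall outside the newest `keep` tags.

    `tags` is an iterable of (tag_name, creation_timestamp) pairs.
    """
    if keep < 0:
        raise ValueError("keep must be non-negative")
    # Sort newest first, then everything past the window is a pruning candidate.
    newest_first = sorted(tags, key=lambda tag: tag[1], reverse=True)
    return [name for name, _ in newest_first[keep:]]
```

The returned names could then be handed to whatever deletes the tags locally and on the server, for example from a scheduled job that runs after each deployment.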

It’s really hard to tell users not to do something. Reading refs is slow. The contributors debated ways to solve the issue:

- Implement an alternate on-disk strategy to store refs.

- Rewrite the refs pack-file but that development process is going to be slow.

- Deleting a ref should be a constant-time operation.

- Looking up a ref should be constant-time too, but it isn’t.

- There are experimental patches that used sqlite and tdb to store/manipulate refs.

- The group agreed that changing the protocol (v2, which is upcoming) would not help here.

- Improvement of refs performance is happening but it’s a slow and incremental process.
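To illustrate why lookup cost matters with hundreds of thousands of refs, here is a small sketch that contrasts a naive scan of packed-refs style text with a one-time index built into a dictionary. It assumes the usual packed-refs layout (a `#` header line, `<sha> <refname>` entries, and `^<sha>` lines for peeled tags). It is not how Git itself looks up refs, and the function names are invented for the example.

```python
from typing import Dict, Optional


def lookup_linear(packed_refs: str, name: str) -> Optional[str]:
    """Scan every line until the ref is found: cost grows with the number of refs."""
    for line in packed_refs.split("\n"):
        if not line or line[0] in "#^":
            continue  # skip blanks, the header comment, and peeled-tag lines
        sha, _, ref = line.partition(" ")
        if ref == name:
            return sha
    return None


def parse_packed_refs(packed_refs: str) -> Dict[str, str]:
    """Build a refname -> sha index once, so later lookups are hash lookups."""
    index: Dict[str, str] = {}
    for line in packed_refs.split("\n"):
        if not line or line[0] in "#^":
            continue
        sha, _, ref = line.partition(" ")
        index[ref] = sha
    return index
```

With the index, each lookup is a dictionary access, but building it still reads the whole file, which is one reason an alternative on-disk format came up in the discussion.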

Then they made a consolidated list of things that are slow in Git:

- git status

- git log

- Updating refs

- Traversing the trees can be slow.

- git log is generally fast but it can get slow in large repositories. Traversing has to zlib-decompress every object. Maybe a better data structure can solve this. When git runs repack it could generate a fast cache. The pack index already has a list of all the SHA-1s.

- git log on a particular file: doing tree diffs is slow, because for each commit they have to look at the parents.

- Path specs are inefficient too. Jeff has “nasty” patches for that.

- At GitHub they do massive ahead/behind counts for branches. They run those operations for 100 branches at a time. They wrote a special tool that does many walks in one iteration and uses bitmaps.

- Another problem area for performance is storing 500 MB text files.

- They have a lot of patches that are uncooked and require cleanup.

- Someone found an infinite loop in JGit and they fixed it by running it in a thread and killing the thread off after a while.
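The ahead/behind counts mentioned in the list above boil down to comparing two sets of reachable commits. Here is a minimal sketch on a toy commit graph. It assumes history is given as a plain dictionary from commit id to parent ids, and it uses Python sets where GitHub's tool reportedly uses bitmaps, so treat it as an illustration of the idea rather than of their implementation.

```python
from typing import Dict, Iterable, Set, Tuple

Graph = Dict[str, Iterable[str]]  # commit id -> ids of its parents


def reachable(parents: Graph, tip: str) -> Set[str]:
    """Collect every commit reachable from `tip` by following parent links."""
    seen: Set[str] = set()
    stack = [tip]
    while stack:
        commit = stack.pop()
        if commit in seen:
            continue
        seen.add(commit)
        stack.extend(parents.get(commit, ()))
    return seen


def ahead_behind(parents: Graph, branch: str, base: str) -> Tuple[int, int]:
    """Return (ahead, behind) of `branch` relative to `base`."""
    on_branch = reachable(parents, branch)
    on_base = reachable(parents, base)
    return len(on_branch - on_base), len(on_base - on_branch)
```

Doing this for 100 branches at once means the walk from the base can be shared across branches instead of repeated, which is presumably where walking many tips in one iteration pays off.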

Setting up Git to send proper email patches is complicated

An interesting topic came up next: the maintainers love discussing patches in public on the mailing list, but several people lamented that it’s hard for newbies to learn to set up Git to send patches to the mailing list. A tool called patchwork was mentioned, but there seemed to be consensus on building a web frontend, a unidirectional solution that would save new contributors from configuring the email sending process to contribute proper patches to the mailing list.

Getting beginner users into the git core mailing list?

Next up was a conversation on how to engage and nurture newbies and general Git users in the Git core mailing list. The group was generally happy to engage with users, but it was made clear that the core mailing list is chiefly for discussing Git development, not primarily a help channel. The libgit2 team reported they use a StackOverflow tag to great effect: they state clearly on their Wiki that they want people to ask questions on StackOverflow, and it has been working for them. There is also a git-users mailing list on Google Groups which is quite active and is a better-suited environment for beginner questions.

Git version 2 protocol discussion

Next Stefan Beller described his proposal to start work on the version 2 network protocol. Version one was not designed with a world of so many refs in mind. The core of the discussion was on how to bring the negotiation of the capabilities to the front of the protocol. The first line of the protocol should only be about compatibility.

What needs changing for version 2? They want to design a protocol that is extensible in the future. The protocol is already extensible via capabilities but right now you can’t change the initial exchange. What they want is a way to do a negotiation at the start.

Capability negotiation is the first topic, and they will only cover static capabilities, because these can be cached with a process like “last time I talked to you, you had these capabilities, so now we can keep the same protocol”.

What does a minimal v2 protocol change look like? They agreed that the first point is to let the client speak first. The first stab could be to send the capabilities first. Dynamic capabilities, like nonces for signed pushes, would break caching of these initial capability advertisements.
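The caching idea can be sketched as follows. Everything here is hypothetical: the class, the method names, and the rule for spotting dynamic capabilities are assumptions made for illustration, not part of any agreed v2 design. The only thing borrowed from the discussion is the principle that static capabilities can be remembered per server while nonce-carrying ones cannot.

```python
from typing import Dict, FrozenSet, Iterable, Tuple

# Assumption: capabilities carrying a per-connection value (such as a
# signed-push nonce) can be recognised by prefix and must never be cached.
DYNAMIC_PREFIXES = ("push-cert=",)


def split_capabilities(advertised: Iterable[str]) -> Tuple[FrozenSet[str], FrozenSet[str]]:
    """Separate cacheable (static) capabilities from per-connection ones."""
    advertised = frozenset(advertised)
    static = frozenset(c for c in advertised if not c.startswith(DYNAMIC_PREFIXES))
    return static, advertised - static


class CapabilityCache:
    """Remembers the static capabilities each server advertised last time."""

    def __init__(self) -> None:
        self._by_server: Dict[str, FrozenSet[str]] = {}

    def remember(self, server: str, advertised: Iterable[str]) -> None:
        static, _dynamic = split_capabilities(advertised)
        self._by_server[server] = static

    def first_message(self, server: str, wanted: Iterable[str]) -> FrozenSet[str]:
        """Capabilities the client can request up front when it speaks first."""
        known = self._by_server.get(server)
        if known is None:
            return frozenset()  # nothing cached: fall back to asking the server
        return frozenset(wanted) & known
```

On a first contact the cache is empty, so the client would still need the server's advertisement; only later connections could skip straight to the request.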

Streaming Objects Format

Carlos Martin brought up a proposal for a streaming objects format. For example, streaming a blob out of a database can be problematic for delta-ed streams, as they cannot zlib compress them. The libgit2 team needs this because some of their backends store objects in databases.

Git Remote Subdir

Johan Herland from Cisco has been working on a new prototype tool that could improve on what git subtree does while also allowing remote fetches. He said that submodules and novice users don’t get along. Given the current state of submodules I agree, and I have written about it before.

Other notable moments

It was fantastic for me to pick the brains of the varied group of contributors. I enjoyed talking with Roberto Tyley, author of bfg and agit; two of the libgit2 and LibGit2Sharp maintainers, Jeff Hostetler and Ed Thomson; GitHubber Brendan Forster; and many others.

It was also great to connect with GitMinutes’s host Thomas Ferris Nicolaisen and with Christian Couder, who invited me to help out on the grand plans for the Git Rev News effort. If you haven’t read it yet, edition 2 was just published!

Conclusions

All in all it was incredibly inspiring for me to be part of the conversation with the diverse and very competent mix of Git contributors. I feel proud of being part of this community in the supporting role of trainer and evangelist ;). Stay tuned for the videos of the day of talks: all sessions had real technical depth and were full of hard-earned scaling lessons. Thanks for reading and let us know what you think at @atlassiandev or at @durdn.